Functional Explanation

This repository contains an application to monitor your hives.

Let's imagine you have a scanner at the entrance of the hive that gives you data about whatever it scans.

This scanner streams the data to your Kafka broker, where you can handle your scenarios.

In this project, the scenarios we handle are:

- monitoring how many bees enter and leave the hives

- tracking the number of Asian wasps that come to kill our bees

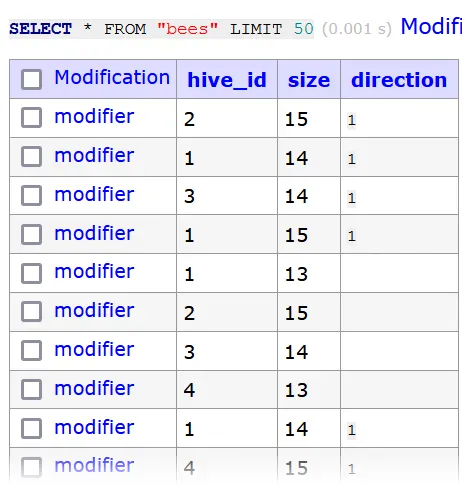

- a kafka-connect-jdbc sink to store the data of your bees

I used Ebiten for the graphical part and Kafka to stream the data.

Technical Explanation

Requirements

You need:

Kafka

docker-compose up -d kafka

This will also start zookeeper, schema-registry (helps specify the format of your data), and akhq (for monitoring the data inside Kafka).

Schema-registry

The schema-registry hosts the format of our values as Avro schemas. This secures our data and, thanks to the compact encoding, speeds up the stream.

What will our value look like?

Every time an insect goes near the scanner, the scanner records:

- a hashmap of the colors in the format ["color_name"]percentage

- the size scanned

- the direction (entering or leaving)

- whether wings have been detected (could be useful for raising alerts on bear paws ...)

From this we can deduce the "schema-key" and "schema-value".

You can also insert the european-bee-value schema now; it will be useful for kafka-connect later.

To post these schemas to the schema-registry, we use its API:

POST SCHEMA_REGISTRY_URL/subjects/SCHEMA_NAME/versions { "schema": "YOUR_SCHEMA_ESCAPED" }

! The schema names are hardcoded; please use the schema names "detected-key", "detected-value" and "european-bee-value".

To build your API request, you can use my avro-converter, which transforms your Avro schema into a curl command usable with the schema-registry, or you can import the postman-collection TODO url

The response should be an ID:

{"id": 1}
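
If you would rather script this step than use curl or Postman, the request could be sketched in Go as follows. The helper names (`buildSchemaPayload`, `registerSchema`), the placeholder schema, and the `http://localhost:8081` URL are assumptions for illustration; `json.Marshal` handles the escaping of the schema string.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// schemaRequest mirrors the request body: {"schema": "YOUR_SCHEMA_ESCAPED"}.
type schemaRequest struct {
	Schema string `json:"schema"`
}

// schemaResponse mirrors the expected answer: {"id": 1}.
type schemaResponse struct {
	ID int `json:"id"`
}

// buildSchemaPayload wraps a raw Avro schema in the request body.
// json.Marshal escapes the quotes inside the schema for us.
func buildSchemaPayload(avroSchema string) ([]byte, error) {
	return json.Marshal(schemaRequest{Schema: avroSchema})
}

// registerSchema posts the schema under the given subject and returns its ID.
func registerSchema(baseURL, subject, avroSchema string) (int, error) {
	payload, err := buildSchemaPayload(avroSchema)
	if err != nil {
		return 0, err
	}
	url := fmt.Sprintf("%s/subjects/%s/versions", baseURL, subject)
	resp, err := http.Post(url, "application/vnd.schemaregistry.v1+json", bytes.NewReader(payload))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("schema-registry returned %s", resp.Status)
	}
	var out schemaResponse
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return 0, err
	}
	return out.ID, nil
}

func main() {
	// Placeholder schema for illustration only, not the project's real one.
	schema := `{"type":"record","name":"Example","fields":[{"name":"size","type":"double"}]}`
	payload, err := buildSchemaPayload(schema)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(payload))
	// To actually register it (the URL is an assumption):
	// id, err := registerSchema("http://localhost:8081", "detected-value", schema)
}
```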

Start

OK, now start the program! From the go folder:

go run .

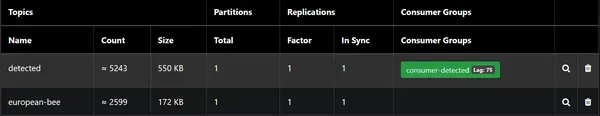

Now let's verify in AKHQ that our scanner is working.

localhost:8085

On the topics page:

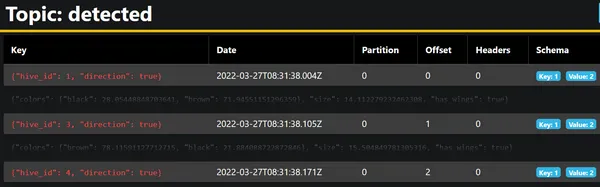

If you click on the topic detected:

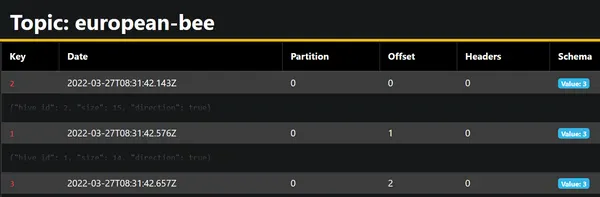

If you click on the topic european-bee:

The topic detected contains the scanned data.


OK, but what's happening?

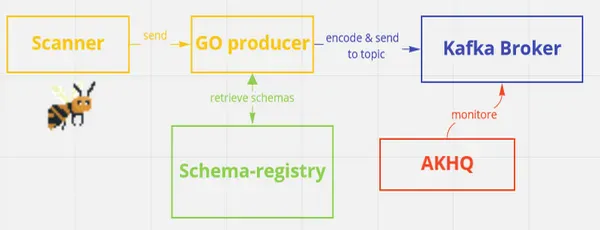

Our scanner detects something to scan and sends the data to the producer.

The producer will publish to the topic detected, but it needs the Avro schema.

It asks the schema-registry for the schemas and encodes the data in Avro format.

It publishes the Kafka message, and we can now see it in AKHQ on the topic detected.

The schema tab in AKHQ shows the schema ID you received when you posted it.

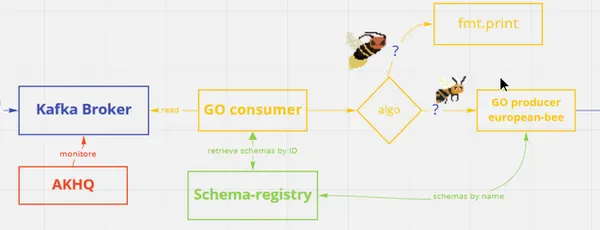

We also have a consumer that reads the messages from the topic detected.

On the AKHQ topics page, you can see the consumer lag (how many messages the consumer still has to read).
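
For intuition, the lag on a single partition is the difference between the last offset written to the log and the last offset the consumer has committed. A minimal sketch (the function name `consumerLag` is made up for this example):

```go
package main

import "fmt"

// consumerLag returns how many messages a consumer still has to read
// on one partition: the log end offset minus the committed offset.
func consumerLag(logEndOffset, committedOffset int64) int64 {
	lag := logEndOffset - committedOffset
	if lag < 0 {
		// Guard against a stale end offset read before the commit.
		return 0
	}
	return lag
}

func main() {
	// 120 messages written, 100 already consumed.
	fmt.Println(consumerLag(120, 100))
}
```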

It decodes the message and obtains the schema ID; now it knows the expected format and can construct the object.
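
The schema ID travels inside the message itself. Confluent's schema-registry serializers frame each message as a magic byte `0`, then a 4-byte big-endian schema ID, then the Avro binary. Assuming this project follows that convention (the source does not say so explicitly), the framing and unframing could look like the sketch below; `frame` and `unframe` are hypothetical names, and the Avro encoding itself is left out.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// magicByte marks a message framed in the Confluent wire format.
const magicByte = 0

// frame prepends the 5-byte header (magic byte + schema ID) to an Avro payload.
func frame(schemaID uint32, avroPayload []byte) []byte {
	msg := make([]byte, 5, 5+len(avroPayload))
	msg[0] = magicByte
	binary.BigEndian.PutUint32(msg[1:5], schemaID)
	return append(msg, avroPayload...)
}

// unframe extracts the schema ID and the Avro payload from a message.
func unframe(msg []byte) (uint32, []byte, error) {
	if len(msg) < 5 {
		return 0, nil, errors.New("message too short for schema header")
	}
	if msg[0] != magicByte {
		return 0, nil, fmt.Errorf("unexpected magic byte %d", msg[0])
	}
	return binary.BigEndian.Uint32(msg[1:5]), msg[5:], nil
}

func main() {
	// "avro-bytes" stands in for a real Avro-encoded value.
	msg := frame(1, []byte("avro-bytes"))
	id, payload, err := unframe(msg)
	if err != nil {
		panic(err)
	}
	fmt.Println(id, string(payload))
}
```

With the ID in hand, the consumer can fetch the matching schema from the schema-registry and decode the remaining bytes.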

Once we have it, we can try to guess which kind of insect it is. In this scenario, if it's a European bee, we publish it to the topic european-bee; otherwise we just write an alert to the console.
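
To make the routing concrete, here is a toy sketch of such a guess. The `Detected` type, the function names, and every threshold (size range, color percentages) are invented for illustration and are not the rules used by the project.

```go
package main

import "fmt"

// Detected is a hypothetical, trimmed-down scanner reading.
type Detected struct {
	Colors   map[string]float64 // color name -> percentage
	Size     float64            // assumed to be in millimeters
	HasWings bool
}

// isEuropeanBee is a toy heuristic; every threshold is an assumption.
func isEuropeanBee(d Detected) bool {
	return d.HasWings &&
		d.Size >= 10 && d.Size <= 16 &&
		d.Colors["yellow"] >= 30 && d.Colors["black"] >= 30
}

// route returns the topic to publish to, or "" when only an alert is written.
func route(d Detected) string {
	if isEuropeanBee(d) {
		return "european-bee"
	}
	fmt.Println("ALERT: unknown insect detected")
	return ""
}

func main() {
	bee := Detected{Colors: map[string]float64{"yellow": 50, "black": 50}, Size: 13, HasWings: true}
	big := Detected{Colors: map[string]float64{"orange": 40, "black": 60}, Size: 28, HasWings: true}
	fmt.Printf("bee -> %q\n", route(bee))
	fmt.Printf("big -> %q\n", route(big))
}
```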

OK, now we would like to store these bees in a database to compute statistics. Is there a quick way to do it? Yes: kafka-connect.

Kafka-connect

Start the services:

docker-compose up kafka-connect

We store the data in a Postgres database:

docker-compose up -d postgres

docker-compose up -d adminer

We need to send a connector configuration (TODO postman collection):

PUT KAFKA_CONNECT_URL/connectors/CONFIG_NAME/config { YOUR_CONFIG }

Visit Adminer:

localhost:8080

On the authentication page, enter the information from the docker-compose file:

- System: Postgres

- Server: postgres

- User: beekeeper

- Password: beepassword

- Database: bees

You should see all your bees stored and available for any SQL query.